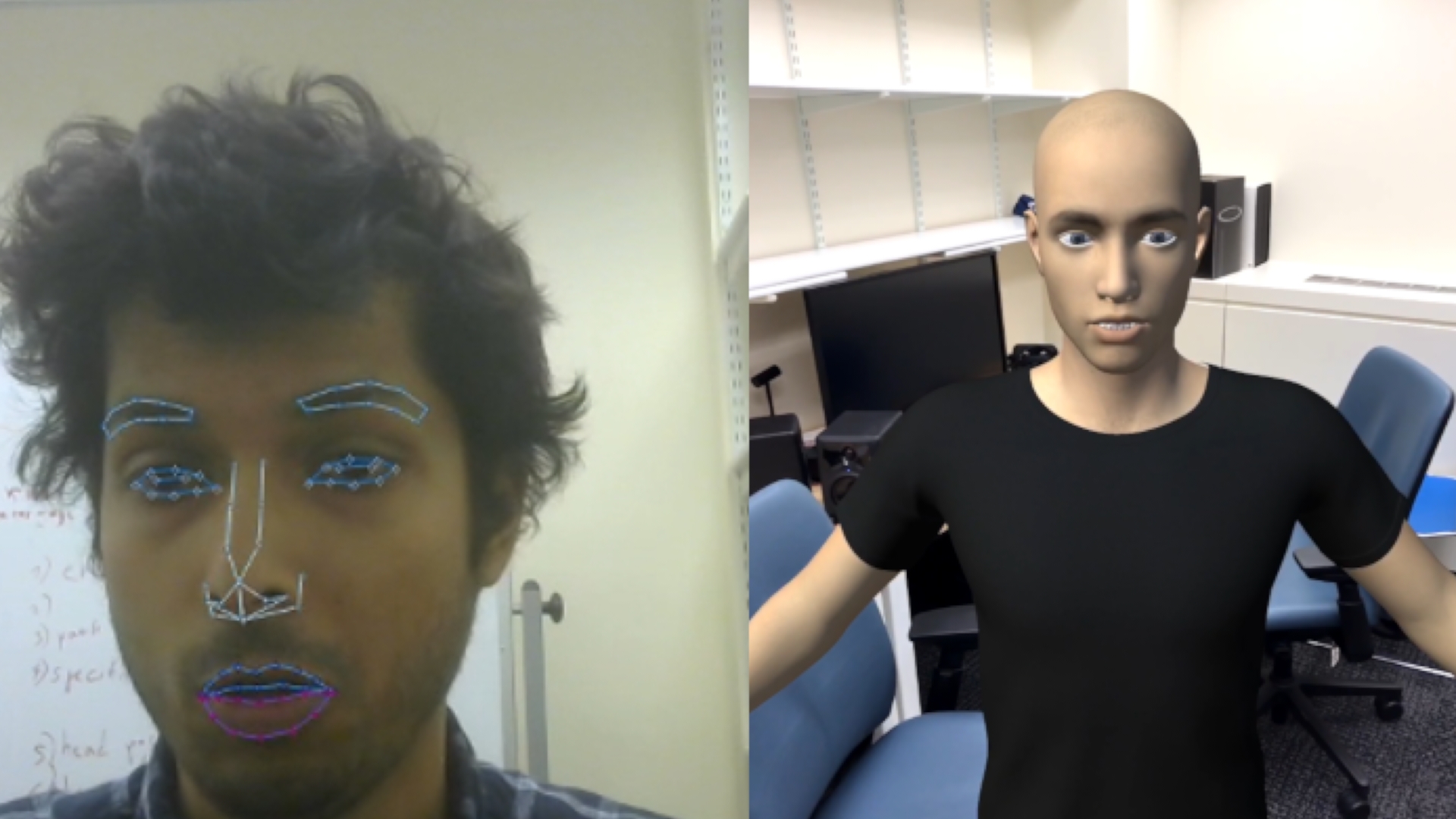

FaceCap

markerless facial animation capture and real-time viseme detection

This was an R&D project exploring the viability of open-source markerless facial animation. It combined two approaches: facial landmark recognition from a webcam feed, and viseme detection, which uses machine learning algorithms to parse audio and translate it into pre-created blendshapes that can be converted for use in Maya or Unity.
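The project description does not say how detected visemes are turned into blendshape values. One common pattern is a per-viseme table of blendshape targets, blended by the classifier's per-viseme probabilities and smoothed across frames to reduce jitter. The sketch below assumes exactly that. The viseme labels (`AA`, `O`, `MBP`, `FV`, `sil`), the blendshape names, the weights, and the helpers `blend_weights` and `smooth` are all hypothetical illustrations, not FaceCap's actual rig or code.

```python
from typing import Dict, List

# Hypothetical viseme -> blendshape target table. Labels, shape names and
# weights are illustrative assumptions, not FaceCap's real mapping.
VISEME_TO_BLENDSHAPES: Dict[str, Dict[str, float]] = {
    "sil": {},                                    # silence: neutral face
    "AA": {"jawOpen": 0.8, "mouthWide": 0.3},
    "O": {"jawOpen": 0.5, "mouthPucker": 0.7},
    "MBP": {"mouthClose": 1.0},
    "FV": {"lowerLipTuck": 0.9, "jawOpen": 0.1},
}


def blend_weights(viseme_probs: Dict[str, float]) -> Dict[str, float]:
    """Probability-weighted sum of each viseme's blendshape targets, clamped to [0, 1]."""
    weights: Dict[str, float] = {}
    for viseme, p in viseme_probs.items():
        for shape, target in VISEME_TO_BLENDSHAPES.get(viseme, {}).items():
            weights[shape] = weights.get(shape, 0.0) + p * target
    return {shape: min(1.0, max(0.0, w)) for shape, w in weights.items()}


def smooth(prev: Dict[str, float], curr: Dict[str, float],
           alpha: float = 0.5) -> Dict[str, float]:
    """Exponential smoothing between frames; a shape missing from a frame counts as 0."""
    shapes = set(prev) | set(curr)
    return {s: alpha * curr.get(s, 0.0) + (1 - alpha) * prev.get(s, 0.0)
            for s in shapes}


if __name__ == "__main__":
    # Two made-up frames of classifier output (probability per viseme).
    frames: List[Dict[str, float]] = [{"AA": 1.0}, {"MBP": 1.0}]
    state: Dict[str, float] = {}
    for probs in frames:
        state = smooth(state, blend_weights(probs))
        print({k: round(v, 3) for k, v in sorted(state.items())})
```

In a Unity or Maya integration, the resulting weights would then be applied to the matching blendshape targets each frame. Note that Unity's `SkinnedMeshRenderer` expects weights on a 0 to 100 scale, so a conversion step would be needed there.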

GitHub Repository

Architecture

- Responsibilities

- Software Used

- Date